You’ve probably seen the posts going around social media about the horrible content that’s cropping up in seemingly innocent videos for kids on YouTube. A child will be watching a cartoon when suddenly the content turns darker–it may be the cartoon characters themselves engaging in violent or sexual behavior, or it may be a scary scene spliced into the middle of the video after parents have stopped watching.

If you haven’t encountered this content yourself, you may be wondering 1) is the hysteria warranted? and 2) if it is, is YouTube still a safe place for my kids? My answer to both questions is the same: yes and no.

I recently went live on Facebook with some more information on this topic. You can watch that here and/or read on.

Let’s do some fact checking

First off, yes, there is weird and scary content on YouTube targeted at kids. James Bridle gives a fantastic explanation of this content in his article (long but definitely worth a read) and TED Talk, but here’s a quick overview.

Related: 15 Outstanding TED Talks That Will Make You a Better Parent

The content generally comes from two different places. The first is randomly generated content–computerized algorithms mash together popular YouTube search terms to create weird, random videos that will show up in search results.

These strange, random videos generate income for their creators when children find them while navigating YouTube on their own, or when the videos end up in YouTube autoplay queues.

The second kind of content is more devious in nature. People will take videos that look innocent–for example, an old Disney cartoon or a full Peppa Pig episode–and somewhere in the middle of the video, after parents have lost interest, the video creators will splice in inappropriate content to scare or harm children.

Need tips for talking to your kids about staying safe online? Check out these Resources for Talking to Your Kids about Internet Safety.

Image source: James Bridle’s article on Medium

There is a third kind of content where video creators will make their own renditions of popular video content, sometimes inappropriate and sometimes not. I’m going to lump this in with the second kind.

Let’s talk about the Momo Challenge

Update 3/5/2019: We’re now hearing several reports that Momo itself was a hoax, but since there are still videos with unexpected, inappropriate content for children, the majority of this information is still relevant.

The most recent example of the second kind of content that I’ve seen reports of on Facebook is the Momo Challenge. It’s a little difficult to separate fact from hype here (as is often the case on the Internet), but the basic history is something like this: it supposedly started as a “challenge” on WhatsApp.

Related: What Happened When I Deleted the Facebook App

People who wanted to participate in the challenge would message a special account on WhatsApp, and “Momo” would respond. (The face used to represent Momo comes from a sculpture created by a Japanese design studio. I’ll spare you the picture because it’s weird and creepy.) Momo would then give participants challenges that ranged from harmless–like getting up at a certain time–to extremely harmful–like committing suicide.

Now, we’re hearing that this so-called challenge has spread to YouTube, and children are seeing the frightening head pop up in the middle of their videos and tell them to do terrible things. Apparently it also threatens to hurt them and their families or friends if they don’t comply.

Are the YouTube videos being hacked?

Update 3/5/2019: Even though the Momo hysteria was manufactured, this section is still true. YouTube was not hacked, and here I explain why it is highly unlikely it will be hacked in this way in the future.

Now, here’s where it gets really murky. I think some clarification is needed. A couple of reports on the Momo Challenge make it sound like people were hacking into videos that had already been uploaded to YouTube and modifying the videos so that Momo was a part of the video.

I do not believe that is true, and here’s why. YouTube surely employs a team of computer security professionals. It is their job to make sure hackers don’t get into their systems and modify videos. Videos are YouTube’s lifeblood. Anything that jeopardizes the integrity of those videos would destroy their whole business if allowed to continue.

Yes, code is imperfect, and yes, hackers take advantage of these flaws. But a hack of this magnitude would already be all over the major news outlets and would be huge news in professional computer security circles. And YouTube would fix it fast.

I searched a couple of the sites that report on computer security issues. The most recent YouTube vulnerability reports were from 2015–one was for a bug that allowed people to put fake comments on their videos, and one was for a bug that allowed you to delete any video (even ones you hadn’t created). Nothing about being able to edit videos on the site.

What, exactly, is happening?

I’m so glad you asked! OK, so YouTube videos are arranged into channels, right? A channel is like your account on YouTube. So when I upload a video, it goes into my channel. When Nick Jr. uploads a Paw Patrol video, it goes into their channel, and when Joe Weirdo uploads a video, it goes into his channel.

What I suspect is happening is that the people creating this content are pirating videos that they know parents search for on YouTube (Peppa Pig, for example). They modify these videos on their own computers, splicing in the inappropriate content, and then upload them to their own YouTube channels.

The giveaway is that these videos will appear on unofficial, non-legitimate channels. So Joe Weirdo’s pirated Peppa Pig video would appear on his channel instead of on the official Peppa Pig channel.

How can I tell if a video is coming from a legitimate YouTube channel?

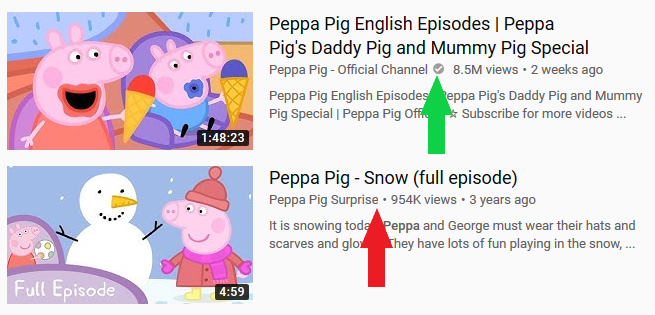

One way to make sure a video is not pirated is to look for the verification badge next to the channel name. Here’s an example from YouTube search results for “Peppa Pig.”

The first result has a checkmark next to the channel name. The second is a pirated video with no checkmark next to the channel name. That checkmark indicates the channel owners have verified their identity with YouTube.

Note that the fact that “Official Channel” is in the name does not necessarily mean anything. Anyone can say they’re official. But the checkmark means YouTube has actually verified it.

Also note that when you hover over the checkmark, a label pops up that says “Verified.”

What can I do to keep my kids safe?

You have a few options. You could swear off YouTube altogether, which is a perfectly appropriate course of action. Maybe you want to use a different video service–check out these free YouTube alternatives for some options. Or maybe you want to tell your kids to go outside or read a book instead.

If you want to keep using YouTube, here are some tips for making it safer for your kids.

- When you want to let your kid watch a video, make sure it’s coming from the official channel for that video.

- Watch it on your television with autoplay off. If you pick a single video and playback stops when the video is over, there’s no risk of your child going down the autoplay rabbit hole. Watching it on the television screen (without access to a remote!) means they can’t just touch the screen to navigate to a new video.

- Use the YouTube Kids app with strict parental control settings. Not even the YouTube Kids app is completely safe, because the filtering process is automated. But strict parental controls can go a long way. I go into more detail in this post on how you can make the YouTube Kids app safer for your kids.

- If at all possible, always be present when your children are watching YouTube. I know sometimes this doesn’t happen, but it’s generally a good idea to be in the room. Bedrooms, especially, should be off limits.

Related: How to Create Screen Time Rules That Work

No solution is going to be completely foolproof. You still have to think about issues like whether or not you agree with the philosophies presented in the videos your kids watch.

Ads are still an issue: they’re less blatant in the YouTube Kids app, but still present. But if you’re aware of the concerns, actively engaged in what your kids are exposed to, and taking appropriate precautions, YouTube can still be a safe place.

Thinking about reducing screen time? Take a look at this post.

Did you find this post useful? Please consider sharing with someone who could use this information.